Searching across vast, federated enterprise data is a significant challenge. In an environment like Netflix's, with hundreds of UIs and diverse domains, users faced a steep learning curve: they had to master a complex Filter Domain Specific Language (DSL) to find the information they needed. To lower this barrier, Netflix's engineering team used Large Language Models (LLMs) to build a system that converts natural language questions into structured Graph Search filter statements. This post summarizes the approach and key insights described in the source material.

The Core Challenge: Syntactic, Semantic, and Pragmatic Correctness

When an LLM converts natural language to Graph Search Filter DSL, the generated statement must be correct on three levels:

- Syntactic Correctness: Does the generated statement follow the DSL's grammar?

- Semantic Correctness: Does the statement use only fields that exist in the index and values that are permitted? This is the level at which hallucinated fields and values are caught.

- Pragmatic Correctness: Does the generated filter accurately capture the user's true intent?
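The first two levels can be checked mechanically, which a small sketch makes concrete. The source does not show the actual Filter DSL grammar or index schema, so everything here is an assumption for illustration: the toy `field == 'value' AND ...` syntax, the `SCHEMA` dictionary, and the function names `parse` and `semantic_errors` are all hypothetical.

```python
import re

# Hypothetical index schema: field name -> allowed values (None = free text).
SCHEMA = {
    "genre": {"drama", "comedy", "thriller"},
    "country": {"DE", "US", "KR"},
    "title": None,
}

# Toy clause grammar: field == 'value', clauses joined by " AND ".
# This is NOT the real Graph Search Filter DSL.
CLAUSE = re.compile(r"^\s*(\w+)\s*==\s*'([^']*)'\s*$")


def parse(statement):
    """Syntactic check: return (field, value) pairs or raise ValueError."""
    clauses = []
    for part in statement.split(" AND "):
        match = CLAUSE.match(part)
        if match is None:
            raise ValueError(f"syntax error in clause: {part!r}")
        clauses.append((match.group(1), match.group(2)))
    return clauses


def semantic_errors(clauses, schema=SCHEMA):
    """Semantic check: list unknown fields and disallowed values."""
    errors = []
    for field, value in clauses:
        if field not in schema:
            errors.append(f"unknown field: {field}")
        elif schema[field] is not None and value not in schema[field]:
            errors.append(f"value {value!r} not allowed for {field}")
    return errors
```

A statement such as `mood == 'dark'` parses cleanly but fails the semantic check because `mood` is not in the schema. Pragmatic correctness has no equivalent automated test, since only the user knows what they meant.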

The key strategy to ensure semantic correctness was applying a RAG (Retrieval-Augmented Generation) pattern separately to both fields and controlled vocabularies.

Context Optimization Strategy Using RAG

Passing every one of the hundreds of fields and thousands of controlled vocabulary values to the LLM is inefficient and reduces accuracy. Netflix narrowed the context using a two-stage RAG approach.

| Target | Method | Purpose |
|---|---|---|
| Field RAG | Embed field names, descriptions, and types, indexing them in a vector store. Perform similarity search between question chunks and embeddings to select Top-K fields. | Include only question-relevant fields in the context, reducing noise and improving accuracy. |
| Controlled Vocabulary RAG | Embed controlled vocabulary values (display names, descriptions, synonyms), indexing them. Search for values similar to the question to provide as allowed values for relevant fields. | Prevent LLM value hallucination and infer additional related fields. |

This process resolves each question into an optimized combination of relevant fields and values.
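The two retrieval stages can be sketched in a few lines. This is a minimal illustration, not Netflix's implementation: `embed` is a bag-of-words stand-in for a real embedding model, an in-memory dictionary replaces the vector store, and the `FIELDS` and `VOCAB` entries and the `build_context` helper are hypothetical.

```python
import math
from collections import Counter

# Hypothetical field index: field name -> description text.
FIELDS = {
    "genre": "genre category of a title such as drama or comedy",
    "country": "country where the title was produced",
    "release_year": "year the title was released",
}

# Hypothetical controlled vocabulary: (field, value) -> display names and synonyms.
VOCAB = {
    ("genre", "drama"): "drama dramas dramatic",
    ("genre", "comedy"): "comedy comedies funny",
    ("country", "DE"): "germany german deutschland",
    ("country", "KR"): "south korea korean",
}


def embed(text):
    """Stand-in for a real embedding model: bag-of-words term counts."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_k(question, documents, k):
    """Return the k document keys most similar to the question, dropping zero scores."""
    q = embed(question)
    scored = [(cosine(q, embed(text)), key) for key, text in documents.items()]
    scored = [(score, key) for score, key in scored if score > 0]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [key for _, key in scored[:k]]


def build_context(question, k_fields=2, k_values=2):
    """Stage 1: relevant fields. Stage 2: allowed values, which may add fields."""
    allowed = {field: [] for field in top_k(question, FIELDS, k_fields)}
    for field, value in top_k(question, VOCAB, k_values):
        allowed.setdefault(field, []).append(value)
    return allowed
```

For the question `"german dramas released in 2020"`, field retrieval in this toy setup finds only `release_year`, while vocabulary retrieval matches `DE` and `drama` and thereby pulls `country` and `genre` into the context. That mirrors the table's point that vocabulary matches can infer additional related fields.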

Building User Trust and Pragmatic Strategies

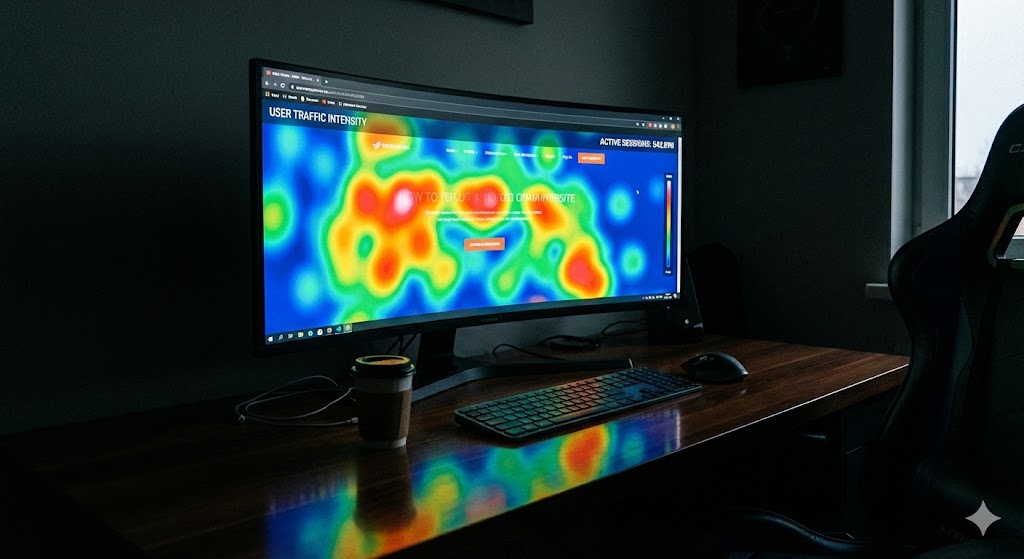

To tackle the hardest problem—pragmatic correctness—Netflix introduced two practical UI/UX strategies.

- Showing the Work: Instead of displaying the complex raw DSL query, the system parses it into an AST (Abstract Syntax Tree) and visualizes it as 'Chips' or 'Facets' in the user interface. Users can intuitively see how their question was interpreted into filter conditions and make adjustments.

- Explicit Entity Selection (@Mentions): When a user mentions a specific entity like `@dark`, the system bypasses the RAG inference step and hardcodes that controlled vocabulary into the context. This removes ambiguity and simultaneously boosts accuracy and user trust.
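Both strategies are easy to picture in code. The sketch below is illustrative only: the AST node shape, the `ENTITIES` catalog (including the mapping of `@dark` to a `title` field), and the helpers `split_mentions` and `to_chips` are assumptions, since the source does not describe the real data structures.

```python
import re

# Hypothetical entity catalog: mention handle -> (field, controlled value).
ENTITIES = {"dark": ("title", "Dark")}

MENTION = re.compile(r"@(\w+)")


def split_mentions(question, entities=ENTITIES):
    """Pin known @mentions as (field, value) pairs and strip them from the text.

    The pinned pairs go straight into the LLM context; only the remaining
    text would be sent through RAG retrieval. Unknown mentions are left as-is.
    """
    pinned = [entities[h] for h in MENTION.findall(question) if h in entities]
    remainder = MENTION.sub(
        lambda m: "" if m.group(1) in entities else m.group(0), question
    )
    return pinned, " ".join(remainder.split())


def to_chips(node):
    """Flatten a filter AST into display chips (handles only 'and' and leaf nodes)."""
    if node["type"] == "and":
        return [chip for child in node["children"] for chip in to_chips(child)]
    return [f"{node['field']}: {node['value']}"]
```

With these helpers, `split_mentions("episodes of @dark from 2019")` pins `("title", "Dark")` and leaves `"episodes of from 2019"` for retrieval, and a two-clause `and` node renders as one chip per clause that the user can inspect or remove.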

Conclusion: This system is evolving beyond a simple translator into an extensible platform. It combines the flexibility of LLMs, the robustness of existing Graph Search infrastructure, the accuracy gains from RAG patterns, and user-centric feedback mechanisms. It is a useful reference for any team looking to integrate AI into complex enterprise search systems.