Automating large-scale software maintenance with AI agents is a powerful vision, but it comes with significant risks. An agent generating incorrect PRs or, worse, code that passes CI but contains functional bugs, can quickly erode trust in the entire automation system. At Spotify, we tackled this by designing for predictability first and foremost. This post explores the core strategy that makes it possible: the Verification Loop. You can find the detailed source in the original engineering blog.


Designing Verification Loops to Preempt Failure Modes

We identified three primary failure modes for unsupervised coding agents:

- Failure to produce a PR: A minor annoyance.

- Producing a PR that fails CI: Frustrating for engineers, who must then decide whether to fix the half-broken code.

- Producing a PR that passes CI but is functionally incorrect: The most serious error, as it undermines trust and is hard to catch in reviews.

To prevent the second and third failure modes, we implemented a multi-layered verification loop. This allows the agent to incrementally confirm it's on the right track before committing to a change.

Deterministic Verifiers and the LLM Judge

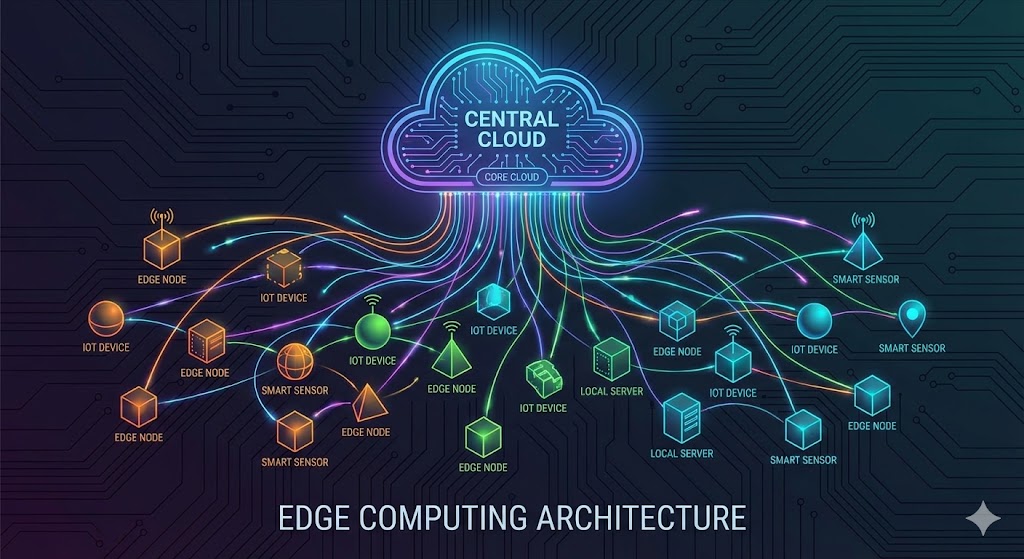

The verification loop consists of two key components:

- Deterministic Verifiers: These are tools that abstract specific build and test systems such as Maven (triggered by pom.xml), Gradle, formatters, and test runners. The agent simply calls a generic 'verify' tool, and the verifier handles executing the actual commands and parsing the output. This conserves the agent's context window and offloads tedious tasks like complex log parsing.

- The LLM Judge: While verifiers catch syntactic and build errors, the Judge catches semantic errors. It evaluates whether the agent strayed outside the prompt's scope (e.g., unnecessary refactoring) or chose an incorrect solution. Internally, across thousands of agent sessions, the Judge vetoes about a quarter of them, and the agent then successfully self-corrects about half the time.
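As a rough illustration of the generic 'verify' tool described above, the following Python sketch selects verifiers by the build files present in a repository, runs them, and returns only a condensed report. The `pom.xml` trigger comes from the text. The `build.gradle` trigger, the exact commands, the error-line heuristics, and every name here (`Verifier`, `select_verifiers`, `summarize`, `verify`) are assumptions made for illustration, not Spotify's implementation.

```python
import subprocess
from dataclasses import dataclass
from pathlib import Path
from typing import Callable


@dataclass(frozen=True)
class Verifier:
    name: str
    trigger_file: str                      # file whose presence activates this verifier
    command: tuple[str, ...]               # command run from the repository root
    is_error_line: Callable[[str], bool]   # heuristic for lines worth showing the agent


# Hypothetical registry: only the pom.xml trigger is taken from the article.
VERIFIERS = (
    Verifier("maven", "pom.xml", ("mvn", "-q", "verify"),
             lambda line: line.startswith("[ERROR]")),
    Verifier("gradle", "build.gradle", ("gradle", "build"),
             lambda line: "FAILED" in line),
)


def select_verifiers(repo_root: Path) -> list[Verifier]:
    """Return the verifiers whose trigger file exists in the repository root."""
    return [v for v in VERIFIERS if (repo_root / v.trigger_file).is_file()]


def summarize(verifier: Verifier, returncode: int, output: str, limit: int = 20) -> str:
    """Condense raw build output so the agent's context is not flooded with logs."""
    if returncode == 0:
        return f"{verifier.name}: ok"
    lines = output.splitlines()
    errors = [line for line in lines if verifier.is_error_line(line)]
    # Fall back to the tail of the log when the heuristic matches nothing.
    shown = errors[:limit] if errors else lines[-limit:]
    return "\n".join([f"{verifier.name}: failed", *shown])


def verify(repo_root: Path, run=subprocess.run) -> list[str]:
    """The single generic tool the agent calls; build-system details stay hidden."""
    reports = []
    for v in select_verifiers(repo_root):
        proc = run(v.command, cwd=repo_root, capture_output=True, text=True)
        reports.append(summarize(v, proc.returncode, proc.stdout + proc.stderr))
    return reports
```

The agent only ever sees the short strings returned by `verify`, which is one way to get the context-saving effect the text describes. The `run` parameter exists so the command runner can be swapped out in tests.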

Design Philosophy for Predictable Agents

The high reliability stems from a philosophy of keeping the agent hyper-focused on a single purpose.

| Design Principle | Description | Expected Outcome |
|---|---|---|
| Limited Access | Only has permissions to read/write code and call verification tools. Pushing code and Slack communication are handled by the surrounding infrastructure. | Increased predictability, improved security. |
| High Sandboxing | Runs in a container with limited binaries and minimal access to external systems. | Reduced security risk, consistent execution environment. |
| Abstracted Tool Interface | The agent calls an abstracted 'verify' tool, not a 'Maven verify' tool. | Reduces agent's cognitive load, improves maintainability. |

This design prevents the agent from getting 'creative' and keeps it faithful to its mission. The key insight is that you are deploying not just an agent, but a system that controls the agent.
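To make the idea of "a system that controls the agent" more concrete, here is a minimal Python sketch of a control loop in which the surrounding infrastructure, not the agent, decides when a change is ready. It assumes a bounded number of attempts and that feedback from the deterministic verifiers and the Judge is passed back to the agent as text. The names `run_session`, `Verdict`, `propose`, `verify`, and `judge` and the retry limit are hypothetical and are not taken from the original post.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Verdict:
    approved: bool
    feedback: str = ""


def run_session(
    propose: Callable[[str], str],        # agent: feedback -> candidate diff
    verify: Callable[[str], list[str]],   # deterministic verifiers: diff -> error lines
    judge: Callable[[str], Verdict],      # LLM Judge: diff -> semantic verdict
    max_attempts: int = 3,                # assumed limit, for illustration only
) -> Optional[str]:
    """Return an approved diff, or None if no attempt passes both layers."""
    feedback = ""
    for _ in range(max_attempts):
        diff = propose(feedback)

        # Layer 1: cheap, deterministic checks run first.
        errors = verify(diff)
        if errors:
            feedback = "verification failed:\n" + "\n".join(errors)
            continue

        # Layer 2: semantic check, only reached once the build is green.
        verdict = judge(diff)
        if verdict.approved:
            return diff  # the caller, not the agent, opens the PR
        feedback = "judge veto: " + verdict.feedback

    return None  # better to produce no PR than an untrustworthy one
```

Returning `None` after the last attempt corresponds to the mildest failure mode listed earlier (no PR produced), which this sketch deliberately prefers over shipping a change that one of the two layers rejected.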

Conclusion: Implications for Engineering Practice

Spotify's case study reframes AI agents from mere code generators to components that need robust control systems around them. The conditions for success are clear:

- Build Fast Feedback Loops: Start by implementing deterministic verifiers so the agent knows immediately if it's going astray.

- Add a Semantic Validation Layer: Beyond syntax and build checks, a process like an LLM Judge is needed to validate that the outcome matches the intent.

- Restrict the Agent's Role: A specialized tool designed for a specific job is more predictable than a general-purpose one.

The field is evolving rapidly, with future challenges including support for more hardware and operating systems, deeper CI/CD pipeline integration, and robust evaluation systems. If you're applying AI to large-scale code transformations, paying close attention to this feedback loop architecture is crucial.